Reasons to Dockerize a Rails application

I had to hear Docker explained many times before I finally grasped why it’s useful.

I’ll explain in my own words why I think Dockerizing my applications is something worth exploring. There are two benefits that I recognize: one for the development environment and one for the production environment.

Development environment benefits

When a new developer joins a team, that developer has to get set up with the team’s codebase(s) and get a development environment running. This can often be time-consuming and tedious. I’ve had experiences where getting a dev environment set up takes multiple days. If an application is Dockerized, spinning up a dev environment can be as simple as running a single command.

In addition to simplifying the setup of a development environment, Docker can simplify the running of a development environment. For example, in addition to running the Rails server, my application also needs to run Redis and Sidekiq. These services are listed in my Procfile.dev file, but you have to know that file exists, and you have to know to start the app using foreman start -f Procfile.dev. With Docker (more precisely, with Docker Compose), you declare which services your application needs and Docker starts them all for you.
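To show how the two approaches line up, here’s a hypothetical Ruby sketch. It isn’t part of foreman or Docker. It reads Procfile-style lines, which foreman expects in the form "name: command", and turns each one into an entry under a services key, the way a docker-compose.yml describes services. The sample Procfile contents are made up. Giving every process the same build: . is also a simplifying assumption; something like Redis would normally get its own base image instead.

```ruby
require "yaml"

# Parse Procfile-style text ("name: command" per line) into a hash.
# Blank lines, comment lines, and lines without a colon are skipped.
def parse_procfile(text)
  text.each_line.with_object({}) do |line, processes|
    line = line.strip
    next if line.empty? || line.start_with?("#")

    name, command = line.split(":", 2)
    next if command.nil?

    processes[name.strip] = command.strip
  end
end

# Turn each process into a Compose-style service that builds the app
# image from the current directory and runs the process's command.
# (Hypothetical mapping, for illustration only.)
def to_compose_services(processes)
  services = processes.transform_values do |command|
    { "build" => ".", "command" => command }
  end
  { "services" => services }
end

procfile = <<~PROCFILE
  web: bin/rails server -p 3000
  worker: bundle exec sidekiq
PROCFILE

puts to_compose_services(parse_procfile(procfile)).to_yaml
```

The point is that a Procfile is just a list of commands someone has to know to run, while the Compose version is a declaration that Docker Compose itself acts on.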

Production environment benefits

There are a lot of different ways to deploy an application to production, and none of them is particularly simple. As of this writing, my main production application is deployed to AWS using Ansible for infrastructure management. This is nice in many ways, but it’s also somewhat duplicative: I’m using one (currently manual) way to set up my development environment and another (codified, using Ansible) way to set up my production environment.

Docker allows me to set up both my development environment and production environment the same way, or at least close to the same way. Once I have my application Dockerized, I can use a tool like Kubernetes to deploy my application to any cloud provider without having to do a large amount of unique infrastructure configuration myself the way I currently am with Ansible. (At least that’s my understanding. I’m not at the stage of actually running my application in production with Docker yet.)

What we’ll be doing in this tutorial

In this tutorial we’ll be Dockerizing a Rails application using Docker and a tool called Docker Compose.

The Dockerization we’ll be doing will give us a development environment. Dockerizing a Rails app for production hosting will be the subject of a separate, later tutorial.

My aim for this tutorial is to cover the simplest possible example of Dockerizing a Rails application. Unfortunately, the result isn’t robust enough to use as a development environment as-is, but it should be a good exercise for building your Docker confidence and a good jumping-off point for creating a more robust Docker configuration.

In this example our Rails application will have a PostgreSQL database and no other external dependencies. No Redis, no Sidekiq. Just a database.

Prerequisites

I’m assuming that before you begin this tutorial you have both Docker and Docker Compose installed. I’m assuming you’re using a Mac. Nothing else is required.

If you’ve never Dockerized anything before, I’d recommend that you check out my other post, How to Dockerize a Sinatra application, before digging into this one. The other post is simpler because there’s less stuff involved.

Fundamental Docker concepts

Let’s say I have a tiny Dockerized Ruby (not Rails, just Ruby) application. How did the application get Dockerized, and what does it mean for it to be Dockerized?

I’ll answer this question by walking sequentially through the concepts we’d make use of during the process of Dockerizing the application.

Images

When I run a Dockerized application, I’m running it from an image. An image is kind of like a blueprint for an application. The image doesn’t actually do anything; it’s just a definition.

If I wanted to Dockerize a Ruby application, I might create an image that says “I want to use Ruby 2.7.1, I have such-and-such application files, and I use such-and-such command to start my application”. I specify all these things in a particular way inside a Dockerfile, which I then use to build my Docker image.

Using the image, I’d be able to run my Dockerized application. The application would run inside a container.

Containers

Images are persistent. Once I create an image, it’s there on my computer (findable using the docker images command) until I delete it.

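Containers are more ephemeral. When I use the docker run command to run an image, part of what happens is that I get a container. (Running containers can be listed using docker container ls.) The container runs for a while and then, when I kill my docker run process, the container stops. A stopped container still exists (docker container ls -a will show it) until it’s removed, unless I started it with docker run --rm, which removes it automatically when it exits.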

The difference between Docker and Docker Compose

One of the things that confused me in other Rails + Docker tutorials was the usage of Docker Compose. What is Docker Compose? Why do we need to use it in order to Dockerize Rails?

Docker Compose is a tool that lets you Dockerize an application that’s composed of multiple containers.

In this example our Rails application uses PostgreSQL, so we can’t use just one image/container. We need one container for Rails and one container for PostgreSQL. Docker Compose lets us say “hey, my application has a Rails container AND a PostgreSQL container,” and it lets us say how our various containers need to talk to each other.

The files involved in Dockerizing our application

Our Dockerized Rails application will have two containers: one for Rails and one for PostgreSQL. The PostgreSQL container can mostly be grabbed off the shelf using a base image. Since certain container needs are really common (a container for Python, a container for MySQL, etc.), Docker Hub offers official images for them that we can grab and use in our application.

For our PostgreSQL need, we’ll grab the PostgreSQL 11.5 image from Docker Hub. Not much more than that is necessary for our PostgreSQL container.

Our Rails container is a little more involved. For that one we’ll use a Ruby 2.7.1 image plus our own Dockerfile that describes the Rails application’s dependencies.

All in all, Dockerizing our Rails application will involve two major files and one minor one. An explanation of each follows.

Dockerfile

The first file we’ll need is a Dockerfile which describes the configuration for our Rails application. The Dockerfile will basically say “use this version of Ruby, put the code in this particular place, install the gems using Bundler, install the JavaScript dependencies using Yarn, and run the application using this command”.

You’ll see the contents of the Dockerfile later in the tutorial.

docker-compose.yml

The docker-compose.yml file describes what our containers are and how they’re interrelated. Again, we’ll see the contents of this file shortly.

init.sql

This file plays a more minor role. In order for the PostgreSQL part of our application to function, we need a user with which to connect to the PostgreSQL instance. That user doesn’t exist by default, so we have to create it. The official postgres image runs any .sql (or .sh) files it finds in a directory called /docker-entrypoint-initdb.d/, but only when it initializes a fresh, empty data directory. Because our PostgreSQL data will live in a Docker volume, init.sql runs the first time we start the database container and never again after that, unless we delete the volume.
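The run-once rule the postgres image follows for /docker-entrypoint-initdb.d/ can be modeled in a few lines of Ruby. This is a hypothetical, simplified sketch, not the image’s actual entrypoint (which is a shell script). It treats a PG_VERSION file in the data directory as the sign that the database has already been initialized, which roughly mirrors the check the official entrypoint makes. It also only recognizes .sh, .sql and .sql.gz files.

```ruby
# A simplified model (not the real entrypoint script) of when the
# official postgres image runs files in /docker-entrypoint-initdb.d/.
INIT_EXTENSIONS = [".sh", ".sql", ".sql.gz"].freeze

# data_dir_files: file names currently in the PostgreSQL data directory
# initdb_files:   file names in /docker-entrypoint-initdb.d/
# Returns the init files that would run, in sorted order.
def init_scripts_to_run(data_dir_files, initdb_files)
  # A PG_VERSION file means the cluster was already initialized,
  # so no init scripts run.
  return [] if data_dir_files.include?("PG_VERSION")

  initdb_files.select { |f| INIT_EXTENSIONS.any? { |ext| f.end_with?(ext) } }.sort
end

# First start: the db_data volume is empty, so init.sql runs.
p init_scripts_to_run([], ["init.sql"])                  # prints ["init.sql"]
# Later starts: the volume already holds a cluster, so nothing runs.
p init_scripts_to_run(["PG_VERSION", "base"], ["init.sql"]) # prints []
```

This is why deleting the container alone doesn’t re-run init.sql. What matters is whether the volume already contains an initialized database.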

Dockerizing the application

Start from this repo called boats.

$ git clone git@github.com:jasonswett/boats.git

The master branch is un-Dockerized. You can start here and Dockerize the app yourself or you can switch to the docker branch which I’ve already Dockerized.

Dockerfile

Paste the following into a file called Dockerfile and put it right at the project root.

```dockerfile
# Use the Ruby 2.7.1 image from Docker Hub
# as the base image (https://hub.docker.com/_/ruby)
FROM ruby:2.7.1

# Use a directory called /code in which to store
# this application's files. (The directory name
# is arbitrary and could have been anything.)
WORKDIR /code

# Copy all the application's files into the /code
# directory.
COPY . /code

# Run bundle install to install the Ruby dependencies.
RUN bundle install

# Install Yarn.
RUN curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
RUN echo "deb https://dl.yarnpkg.com/debian/ stable main" | tee /etc/apt/sources.list.d/yarn.list
RUN apt-get update && apt-get install -y yarn

# Run yarn install to install JavaScript dependencies.
RUN yarn install --check-files

# Set "rails server -b 0.0.0.0" as the command to
# run when this container starts.
CMD ["rails", "server", "-b", "0.0.0.0"]
```

docker-compose.yml

Create another file called docker-compose.yml. Put this one at the project root as well.

```yaml
# Use version 3.8 of the Compose file format.
version: "3.8"

# Each thing that Docker Compose runs is referred to as
# a "service". In our case, our Rails application is one
# service ("web") and our PostgreSQL database instance
# is another service ("database").
services:
  database:
    # Use the postgres 11.5 base image for this container.
    image: postgres:11.5
    volumes:
      # We need to tell Docker where on the PostgreSQL
      # container the PostgreSQL data lives so it can be
      # kept between runs. /var/lib/postgresql/data is the
      # postgres image's default data directory (its PGDATA),
      # so that's the path we use.
      #
      # We're associating this directory with something
      # called a volume. (You can see all your Docker
      # volumes by running "docker volume ls".) The name
      # of our volume is db_data.
      - db_data:/var/lib/postgresql/data

      # This mounts our init.sql into the container at
      # /docker-entrypoint-initdb.d/init.sql. The postgres
      # image runs files in that directory only when it
      # initializes an empty data directory, i.e. the first
      # time the container starts with a fresh db_data
      # volume, but not again after that.
      #
      # The init.sql file is a one-liner that creates a
      # user called (arbitrarily) boats_development.
      - ./init.sql:/docker-entrypoint-initdb.d/init.sql

  web:
    # Build this service's image from the Dockerfile in
    # the current directory (the project root).
    build: .

    # This makes it so any code changes inside the project
    # directory get synced with Docker. Without this line,
    # we'd have to rebuild the image every time we
    # changed a file.
    volumes:
      - .:/code:cached

    # The command to be run when we run "docker-compose up".
    # Removing a leftover server.pid first keeps Rails from
    # refusing to boot after an unclean shutdown.
    command: bash -c "rm -f tmp/pids/server.pid && bundle exec rails s -p 3000 -b '0.0.0.0'"

    # Map port 3000 on the host to port 3000 in the container.
    ports:
      - "3000:3000"

    # Specify that this container depends on the other
    # container, which we've called "database", so Compose
    # starts that one first.
    depends_on:
      - database

    # Specify the values of the environment variables
    # used in this container. DATABASE_PASSWORD has no
    # value, so Compose takes it from the shell running
    # docker-compose; if it's unset there, no password is used.
    environment:
      RAILS_ENV: development
      DATABASE_NAME: boats_development
      DATABASE_USER: boats_development
      DATABASE_PASSWORD:
      DATABASE_HOST: database

# Declare the volumes that our application uses.
volumes:
  db_data:
```
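The web container finds PostgreSQL through the hostname database because, on the network Docker Compose creates, each service is reachable by its service name. That means DATABASE_HOST and the depends_on entry have to match the service name exactly, and every named volume has to be declared at the top level. Here’s a hypothetical Ruby sanity check, not part of the boats repo. It loads a trimmed copy of the file above (comments and a few keys removed) and reports mismatches. The method name compose_problems and the specific checks are my own choices.

```ruby
require "yaml"

# A trimmed copy of the docker-compose.yml above.
COMPOSE = <<~COMPOSE_YML
  version: "3.8"
  services:
    database:
      image: postgres:11.5
      volumes:
        - db_data:/var/lib/postgresql/data
        - ./init.sql:/docker-entrypoint-initdb.d/init.sql
    web:
      build: .
      volumes:
        - .:/code:cached
      ports:
        - "3000:3000"
      depends_on:
        - database
      environment:
        RAILS_ENV: development
        DATABASE_HOST: database
  volumes:
    db_data:
COMPOSE_YML

# Returns a list of problems; an empty list means the checks passed.
def compose_problems(compose)
  services = compose.fetch("services")
  web = services.fetch("web")
  problems = []

  # Services reach each other by service name, so DATABASE_HOST
  # must name one of the services.
  host = web.dig("environment", "DATABASE_HOST")
  problems << "DATABASE_HOST #{host.inspect} is not a service" unless services.key?(host)

  Array(web["depends_on"]).each do |dep|
    problems << "web depends on unknown service #{dep}" unless services.key?(dep)
  end

  # Every named volume a service mounts must be declared at the top level.
  declared = (compose["volumes"] || {}).keys
  services.each_value do |service|
    Array(service["volumes"]).each do |mount|
      source = mount.split(":").first
      next if source.start_with?(".", "/") # bind mount of a host path
      problems << "volume #{source} is not declared" unless declared.include?(source)
    end
  end

  problems
end

p compose_problems(YAML.safe_load(COMPOSE))
```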

init.sql

This one-liner is our third and final Docker-related file to add. It will create a PostgreSQL user for us called boats_development. Like the other two files, this one can also go at the project root.

CREATE USER boats_development SUPERUSER;

config/database.yml

We’re done adding our Docker files, but we still need to make one change to the Rails application itself. We need to modify the Rails app’s database configuration so that it points at the PostgreSQL instance running in the database service’s container, not at the container the Rails app itself is running in. That container is reachable by its service name, database, which is the value we gave DATABASE_HOST in docker-compose.yml.

```yaml
default: &default
  adapter: postgresql
  encoding: unicode
  database: <%= ENV['DATABASE_NAME'] %>
  username: <%= ENV['DATABASE_USER'] %>
  password: <%= ENV['DATABASE_PASSWORD'] %>
  port: 5432
  host: <%= ENV['DATABASE_HOST'] %>
  pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>
  timeout: 5000

development:
  <<: *default

test:
  <<: *default

production:
  <<: *default
```
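The ENV lookups work because Rails evaluates database.yml as ERB before parsing it as YAML. To see what the development configuration resolves to when the environment variables from docker-compose.yml are set, you can approximate that two-step process outside Rails. This is a minimal sketch that only approximates what Rails does internally. The method name resolved_config is hypothetical.

```ruby
require "erb"
require "yaml"

# The default and development sections of config/database.yml from above.
# The quoted heredoc tag keeps Ruby from interpolating anything itself.
DATABASE_YML = <<~'YML'
  default: &default
    adapter: postgresql
    encoding: unicode
    database: <%= ENV['DATABASE_NAME'] %>
    username: <%= ENV['DATABASE_USER'] %>
    password: <%= ENV['DATABASE_PASSWORD'] %>
    port: 5432
    host: <%= ENV['DATABASE_HOST'] %>
    pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>
    timeout: 5000

  development:
    <<: *default
YML

# Render the ERB tags, then parse the result as YAML. Aliases must be
# allowed so the "<<: *default" merge key works.
def resolved_config(env_name)
  rendered = ERB.new(DATABASE_YML).result
  YAML.safe_load(rendered, aliases: true).fetch(env_name)
end

# Simulate the environment block from docker-compose.yml.
ENV["DATABASE_NAME"] = "boats_development"
ENV["DATABASE_USER"] = "boats_development"
ENV["DATABASE_HOST"] = "database"
ENV.delete("DATABASE_PASSWORD")

p resolved_config("development")
```

An unset DATABASE_PASSWORD renders as an empty value, which YAML reads as nil. That’s how the configuration ends up with no password.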

Building and running our Dockerized application

Run the following command to build the application we’ve just described in our configuration files.

$ docker-compose build

Once that has successfully completed, run docker-compose up to run our application’s containers.

$ docker-compose up

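The very last step before we can see our Rails application in action is to create the database and run the migrations, just like we would if we were running a Rails app without Docker. Since docker-compose up keeps running in the foreground, run these commands in a second terminal window.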

$ docker-compose run web rails db:create

$ docker-compose run web rails db:migrate

The docker-compose run command runs a one-off command in a new container for the given service. Running docker-compose run web rails db:create means “start a container for the web service and run rails db:create in it.”
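One thing to watch for: depends_on only controls the order in which containers start. It doesn’t wait until PostgreSQL is actually ready to accept connections. If db:create fails right after docker-compose up because the database is still starting, just wait a moment and retry, or use a small polling script. Below is a hypothetical Ruby sketch of such a script. The file name wait_for_port.rb and the timeout defaults are my own assumptions.

```ruby
require "socket"

# Poll host:port until a TCP connection succeeds or the timeout expires.
# Returns true if the port became reachable, false otherwise.
def wait_for_port(host, port, timeout: 30, interval: 0.5)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    begin
      Socket.tcp(host, port, connect_timeout: 1).close
      return true
    rescue SystemCallError, SocketError, IOError
      # Not accepting connections yet (or the host name doesn't resolve yet).
      return false if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline

      sleep interval
    end
  end
end

# Only act when called with a host and port, e.g.
#   ruby wait_for_port.rb database 5432
if ARGV.length == 2
  host = ARGV[0]
  port = Integer(ARGV[1])
  abort "Timed out waiting for #{host}:#{port}" unless wait_for_port(host, port)
  puts "#{host}:#{port} is accepting connections"
end
```

Because the project directory is mounted at /code, a file saved at the project root would be available inside the web container. For example, docker-compose run web sh -c "ruby wait_for_port.rb database 5432 && rails db:create" would create the database only once PostgreSQL answers.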

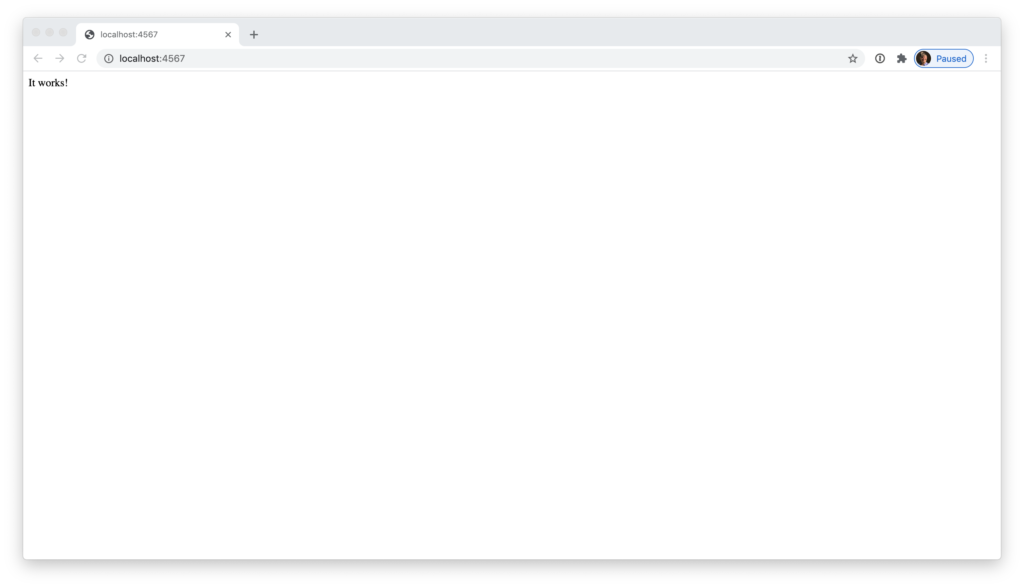

Finally, open http://localhost:3000 to see your Dockerized Rails application running in the browser.

$ open http://localhost:3000
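If you’d rather check from a script than a browser (say, in a setup script for new teammates), you can send a request to port 3000 instead. Here’s a minimal sketch using only Ruby’s standard library. The method name app_responding? is hypothetical, and treating any 2xx or 3xx status as “up” is my own assumption.

```ruby
require "net/http"
require "uri"

# Returns true if an HTTP GET to url answers with a 2xx or 3xx status,
# and false on an error status or if nothing is listening.
def app_responding?(url)
  response = Net::HTTP.get_response(URI(url))
  response.is_a?(Net::HTTPSuccess) || response.is_a?(Net::HTTPRedirection)
rescue SystemCallError, IOError, SocketError, Net::OpenTimeout, Net::ReadTimeout
  false
end

url = ARGV.fetch(0, "http://localhost:3000")
puts app_responding?(url) ? "#{url} is responding" : "No response from #{url}"
```

A 500 error page counts as “not responding” here, which is usually what you want from a smoke check.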

Congratulations. You now have a Dockerized Rails application.

If you followed this tutorial and it didn’t work for you, please leave a comment with your problem, and if I can, I’ll help troubleshoot. Good luck.